Optimising your website’s crawlability is one of the most important elements of search engine optimisation (SEO), but it’s also one of the most overlooked. Crawling allows search engines to discover and understand your website, and without it, your pages won’t rank or be displayed in the SERPs.

At Embryo, we’re experts in the world of organic SEO, with a reputation for getting brands noticed online. In this blog, we’ll use our expertise to help you improve your site’s crawlability in just 10 simple steps, enabling search engines to better navigate your site and potentially boosting your rankings and online visibility.

Need some help optimising your SEO strategy? Get in touch to find out more about our SEO services.

1. Improve page loading speed

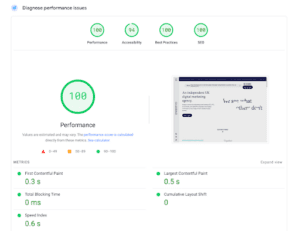

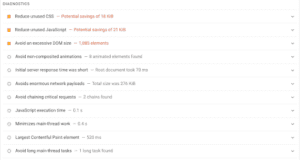

Page loading speed is a crucial part of the user experience, and it affects crawling too: when a server responds slowly, crawlers can fetch fewer pages in the time they spend on your site, so slow websites are less likely to be fully crawled and indexed. If you’re not sure what your page speed is, Google’s PageSpeed Insights tool shows the current state of your website and gives actionable recommendations for improving it.

Some of the things you can do to improve your page speed include:

- Upgrading your server or hosting plan to ensure optimal performance

- Reducing the size of CSS, JavaScript, and HTML files

- Compressing images and ensuring the correct formats are used (JPEG for photographs, PNG for transparent graphics, etc.)

- Enabling browser caching so frequently accessed resources can be stored locally on users’ devices

- Reducing the number of redirects and removing any unnecessary ones

- Removing any unnecessary third-party scripts or plugins
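Two of the items above, compression and browser caching, can be spotted from a page's HTTP response headers. The following is a minimal sketch rather than a full audit: the function name `audit_response_headers` and the wording of the warnings are our own, and it assumes you have already collected the headers for a URL (for example from your browser's developer tools).

```python
def audit_response_headers(headers: dict[str, str]) -> list[str]:
    """Return warnings about compression and caching for one HTTP response."""
    # Header names are case-insensitive, so normalise them before looking up.
    h = {name.lower(): value.lower() for name, value in headers.items()}
    warnings = []

    # Text assets (HTML, CSS, JavaScript) are normally served compressed.
    if h.get("content-encoding") not in {"gzip", "br", "zstd", "deflate"}:
        warnings.append("no compression (Content-Encoding missing)")

    # Without Cache-Control, browsers have to guess how long to keep a file.
    cache_control = h.get("cache-control", "")
    if not cache_control:
        warnings.append("no Cache-Control header")
    elif "no-store" in cache_control:
        warnings.append("caching disabled (no-store)")

    return warnings


# Hypothetical headers for a stylesheet that is compressed and cacheable.
print(audit_response_headers({
    "Content-Encoding": "gzip",
    "Cache-Control": "max-age=31536000",
}))
```

An empty list means neither check raised a warning. It does not mean the page is fast, so treat this as a quick sanity check alongside a proper speed test.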

2. Optimise Core Web Vitals

Core Web Vitals are specific factors that Google considers essential in a user’s experience on your site, so making sure they’re optimised is crucial for your pages to be crawled properly.

The Core Web Vitals include:

- Largest Contentful Paint (LCP): Measures how long it takes for the largest visible element on a web page to render, which reflects how quickly the page appears to load from the user’s point of view

- Interaction to Next Paint (INP): Measures how responsive a web page is

- Cumulative Layout Shift (CLS): Measures how stable a web page is

Google’s PageSpeed Insights (mentioned above) can be used to identify issues related to Core Web Vitals and offers useful suggestions for improvement.
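If you export your metrics and want to rate them yourself, the sketch below shows one way to do it. It uses the "good" and "poor" boundaries Google publishes for each metric at the time of writing (LCP in seconds, INP in milliseconds, CLS as a unitless score), so check the current documentation before relying on them. The names `THRESHOLDS` and `rate_metric` are ours, for illustration only.

```python
# (good_limit, poor_limit) for each metric.
# Units: LCP in seconds, INP in milliseconds, CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate_metric(name: str, value: float) -> str:
    """Rate a Core Web Vitals value as good, needs improvement, or poor."""
    good_limit, poor_limit = THRESHOLDS[name]
    if value <= good_limit:
        return "good"
    if value <= poor_limit:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for a single page.
for metric, value in {"LCP": 2.1, "INP": 350, "CLS": 0.3}.items():
    print(metric, rate_metric(metric, value))
```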

3. Strengthen the internal link structure

Crawl bots use internal links to discover and index new pages on your website, so having a strong internal linking structure is essential. You should aim to create a logical internal structure for your site so that it’s clear which pages are the most important and how different pages relate to each other.

Your main service or category pages should link to relevant subpages and vice versa, with related long-form guides and blog content linking back up to the pages at the top of the pyramid to lend them more authority.
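One way to sanity-check a structure like this is to measure how many clicks each page is from the homepage, and to look for pages that no internal link reaches at all. The sketch below assumes you already have a map of each page to the pages it links to (most crawling tools can export one). The example site and the names `click_depths` and `orphan_pages` are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: page -> number of clicks away."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest route
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict[str, list[str]], home: str) -> list[str]:
    """Known pages that cannot be reached by following links from the homepage."""
    reachable = click_depths(links, home)
    return sorted(page for page in links if page not in reachable)


# Hypothetical site: each key is a page, each value lists the pages it links to.
SITE = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/services/seo/": ["/services/"],
    "/blog/": ["/blog/crawlability-guide/"],
    "/blog/crawlability-guide/": ["/services/seo/"],
    "/old-landing-page/": ["/"],
}

print(click_depths(SITE, "/"))
print(orphan_pages(SITE, "/"))
```

Pages with a large click depth, or pages that show up as orphans, are good candidates for new internal links from your main service or category pages.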

Creating a strong internal linking structure can be tricky, and there are lots of best practices to bear in mind. Check out our internal linking best practices blog for more guidance on this topic.

4. Fix broken links

Broken links can prevent pages from being found and crawled, hindering your ability to create a strong internal linking structure. You’ll know a link is broken if clicking it gives you a 404 error message, AKA ‘page not found’.

You should check your site regularly to ensure you don’t have any broken links. There are a number of SEO tools you can use to identify broken links, including Google Search Console, Analytics, and Screaming Frog.

Once you’ve found broken links, you can either redirect them, update them, or remove them.
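If you’d like to see roughly what these tools do under the hood, here is a minimal sketch using only Python’s standard library. It collects the links from a page’s HTML and reports any that return an error status. The names `LinkCollector`, `http_status`, and `find_broken_links` are our own, and `http_status` assumes absolute URLs and a server that answers HEAD requests, which not every server does.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def http_status(url: str) -> int:
    """Status code for an absolute URL (assumes the server accepts HEAD)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx and 5xx responses arrive as exceptions


def find_broken_links(html: str, get_status: Callable[[str], int]) -> list[str]:
    """Return every link in the HTML whose status code is 400 or above."""
    collector = LinkCollector()
    collector.feed(html)
    return [href for href in collector.hrefs if get_status(href) >= 400]
```

Calling `find_broken_links(page_html, http_status)` checks the live URLs. Passing a different `get_status` function lets you try the logic on saved crawl data without making any requests.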

Claire Wilder, SEO Account Manager at Embryo, says:

‘Internal linking is key to a strong site structure, ensuring your website is easy to navigate to users and crawlable to search engine crawlers. By fixing broken links, you will enhance your customer experience, increase your authority, and increase your site’s crawlability.’

5. Complete a site audit

Conducting a site audit is a great way to check if your website is properly optimised for crawling and indexing. Start by checking the percentage of pages Google has indexed for your site – you can do this by looking at the Page Indexing Report in Google Search Console. It’s unlikely that your indexability rate will be 100%, but if it’s below 90%, this is a sign that your site has issues that need investigating.
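The calculation itself is simple. As a rough sketch, assuming you take the indexed and not-indexed page counts from the Page Indexing Report (the function names and the example figures below are hypothetical):

```python
def index_rate(indexed: int, not_indexed: int) -> float:
    """Percentage of known pages that Google has indexed."""
    total = indexed + not_indexed
    if total == 0:
        raise ValueError("no pages reported")
    return 100 * indexed / total


def needs_investigation(indexed: int, not_indexed: int,
                        threshold: float = 90.0) -> bool:
    """True when the index rate falls below the 90% rule of thumb."""
    return index_rate(indexed, not_indexed) < threshold


# Hypothetical report: 850 pages indexed, 150 not indexed.
print(index_rate(850, 150))           # 85.0
print(needs_investigation(850, 150))  # True
```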

You should also audit newly published pages to make sure they’re being crawled and indexed. Use Search Console’s URL Inspection Tool to check whether each new page is showing up as expected. If a page isn’t, you can request indexing for it to guide the crawl bots in the right direction.

6. Remove low-quality or duplicate content

If there’s duplicate content on your site, this can confuse the crawl bots as they don’t know which version to index. An alert will sometimes be triggered in Google Search Console to let you know that Google is encountering more URLs than it thinks it should. However, sometimes you’ll have to check for duplicate URLs yourself, looking at crawl results for duplicate or missing tags, or URLs with extra characters.
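When checking by hand, it helps to reduce each URL to a normalised form so that variants of the same page group together. The sketch below is one way to do that, under a few assumptions you should adjust for your own site: that the listed tracking parameters never change the content, and that a trailing slash makes no difference. The names `TRACKING_PARAMS`, `normalise`, and `duplicate_groups` are ours.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only track campaigns and never change content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def normalise(url: str) -> str:
    """Reduce a URL to a comparable form."""
    parts = urlsplit(url)
    # Drop tracking parameters and sort the rest so their order is irrelevant.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in TRACKING_PARAMS
    )
    # Assumption: /shoes and /shoes/ serve the same page on this site.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def duplicate_groups(urls: list[str]) -> dict[str, list[str]]:
    """Group crawled URLs that collapse to the same normalised form."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalise(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


# Hypothetical crawl results.
CRAWLED = [
    "https://www.websitedomain.com/shoes/",
    "https://www.websitedomain.com/shoes?utm_source=newsletter",
    "https://www.websitedomain.com/boots/",
]
print(duplicate_groups(CRAWLED))
```

Each group in the output is a set of URLs that probably serve the same content, and therefore a candidate for a redirect or a canonical tag.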

Low-quality content can also negatively impact your site’s crawlability. Check your content to make sure it’s informative, original, and well-written. Any poor content with lots of spelling and grammar errors should be removed or rewritten as soon as possible.

Need a hand with adding high-quality content to your site? Our content marketing experts know exactly what it takes to write SEO-optimised content that ranks.

7. Submit your sitemap to Google

If you’ve recently made changes to your content and want Google to know about them straight away, you can submit a sitemap via Google Search Console. A sitemap is a file that tells the search engine what pages exist on your website, providing a blueprint that can be used to better find, crawl, and index your pages.

Sitemaps allow crawlers to find all of your pages at once, rather than having to follow several internal links to get to new content. This is particularly useful if you have lots of pages on your website, frequently add new pages or content, or your site does not have good internal linking.
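Most content management systems and SEO plugins will generate a sitemap for you, but the format is simple enough to build by hand. As an illustration, the sketch below produces a minimal XML sitemap following the sitemaps.org protocol. The function name `build_sitemap`, the URLs, and the dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages; swap in your own URLs and modification dates.
PAGES = [
    ("https://www.websitedomain.com/", "2024-01-15"),
    ("https://www.websitedomain.com/services/seo/", "2024-02-01"),
]
print(build_sitemap(PAGES))
```

Save the output as `sitemap.xml` at the root of your site, then submit its URL in Google Search Console.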

8. Update robots.txt file

All websites should have a robots.txt file, which provides directives to web crawlers, letting them know exactly how you want them to crawl your site. This is handy because you can limit which pages Google crawls (for example, you probably don’t want crawlers spending time on pages like directories and tags). Bear in mind that robots.txt controls crawling rather than indexing, so a page you need kept out of Google’s index should use a noindex tag instead. A robots.txt file can also negatively impact your site’s crawlability if you end up inadvertently blocking crawler access to pages that you want to be crawled.

It’s worth looking at your robots.txt file to make sure everything is as it should be. You can usually find it by entering https://www.websitedomain.com/robots.txt into your browser. An SEO agency like Embryo can help you with this if you’re not confident looking at it yourself or if you think you don’t have a robots.txt file on your website.
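You can also test a robots.txt file against a list of URLs you expect to be crawlable. The sketch below uses Python’s built-in `urllib.robotparser`. The rules and URLs are made up for illustration, and Python’s parser does not interpret every pattern exactly the way Googlebot does (wildcards in particular), so treat the result as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /wp-admin/
"""


def blocked_urls(robots_txt: str, urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt blocks for this user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Pages we want crawled, plus one that should be blocked.
URLS = [
    "https://www.websitedomain.com/services/seo/",
    "https://www.websitedomain.com/tag/news/",
]
print(blocked_urls(ROBOTS_TXT, URLS))
```

If a page you want to rank appears in the output, the matching Disallow rule is the place to start looking.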

9. Check your canonicalisation

Canonical tags let the search engine know which page to give authority to when you have two or more pages that are similar or even duplicate. This is a great way to make sure duplicated or outdated versions of your pages are skipped by crawl bots, but there is the risk that rogue canonical tags can appear and cause the search engines to crawl and index the wrong pages. To catch this problem, you can use Search Console’s URL Inspection Tool to compare the canonical you declared for a page with the one Google selected, then remove or correct any rogue tags.
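For a quick check across many pages, you can read the canonical tag straight from each page’s HTML. The sketch below is a simplified illustration that assumes every page should point to itself, which won’t be true for genuine duplicates, so adapt the comparison to your own setup. The names `CanonicalFinder` and `canonical_issue` are ours.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_issue(page_url: str, html: str) -> Optional[str]:
    """Describe a problem with the page's canonical tag, or None if it looks fine."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing canonical tag"
    if len(finder.canonicals) > 1:
        return "multiple canonical tags"
    # Assumption: this page is meant to be its own canonical version.
    if finder.canonicals[0] != page_url:
        return f"canonical points to {finder.canonicals[0]}"
    return None


# Hypothetical page whose canonical tag points at the homepage by mistake.
PAGE = "https://www.websitedomain.com/shoes/"
ROGUE_HTML = '<head><link rel="canonical" href="https://www.websitedomain.com/"></head>'
print(canonical_issue(PAGE, ROGUE_HTML))
```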

10. Eliminate redirect chains and internal redirects

Redirects are often used to direct site visitors from one page to a newer, more relevant one and will inevitably be used as your site evolves. However, using them incorrectly could sabotage your site’s crawlability and indexability.

The most common problem is a redirect chain, which is when there’s more than one redirect between the link clicked on and the final destination. Every extra hop slows the journey down for users and crawlers alike, so Google considers it a negative signal and is less likely to crawl and index your page.

Use a tool such as Screaming Frog or Redirect-Checker.org to check your site’s redirects and make sure any redirect chains are fixed or eliminated.
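If your tool can export redirects as a simple list of “from” and “to” URLs, spotting chains takes only a few lines. In the sketch below, the redirect map is invented for illustration and the names `redirect_chain` and `find_chains` are ours. A chain is any starting URL that needs more than one redirect to reach its destination.

```python
def redirect_chain(url: str, redirects: dict[str, str],
                   max_hops: int = 10) -> list[str]:
    """Follow redirects from a URL and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        if next_url in chain or len(chain) > max_hops:
            route = " -> ".join(chain + [next_url])
            raise ValueError(f"redirect loop or too many hops: {route}")
        chain.append(next_url)
    return chain


def find_chains(redirects: dict[str, str]) -> dict[str, list[str]]:
    """Return the starting URLs that take more than one redirect to resolve."""
    chains = {}
    for start in redirects:
        chain = redirect_chain(start, redirects)
        if len(chain) > 2:  # start + destination is one redirect; more is a chain
            chains[start] = chain
    return chains


# Hypothetical export: each key redirects to its value.
REDIRECTS = {
    "/old": "/newer",
    "/newer": "/newest",
    "/promo": "/sale",
}
print(find_chains(REDIRECTS))
```

To fix a chain, point the first URL straight at the final destination (here, `/old` directly to `/newest`) and update any internal links that still use the old address.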

Increase your online visibility with Embryo

Hopefully by now you understand the importance of improving your site’s crawlability to boost your rankings and online visibility. However, implementing the above steps yourself can sometimes be tricky, especially if you’re not an SEO expert.

Whether you need a hand actioning technical SEO changes on your website or some help to create the perfect organic SEO strategy, the team at Embryo will be happy to help.